Robotic and humanoid assistants that help in nursing homes, around the house or at work are not yet commonplace in Germany. In other countries, though, numerous so-called service robots are already in use. In Japan, for example, the “Robear” has been in use in hospitals for several years. It is controlled via a tablet and can, among other tasks, lift patients from a bed into a wheelchair. Robear helps with physically strenuous hospital work and thereby relieves the nursing staff.

The advantage of service robots in medicine and in the health care sector is quite obvious: they don’t get tired, and can therefore take over human tasks as well as the jobs of other machines. But if machines are to take over everyday tasks for people, and technology is meant to free us up for other things, to relieve and support us, shouldn’t those assistants also learn to recognize human emotions and interpret them properly?

Answering this question is what the AI research field of affective computing is all about. Here, computers learn to recognize human emotions and to imitate them. One aim of the research is, for example, to improve care and rehabilitation processes by means of intelligent robots that are able to understand the emotional state of a patient and respond with an emotional action that fits the situation. The same could apply to digital voice assistants, which would understand their human counterparts better thanks to affective computing and, by simulating emotions, seem more human themselves.

How closely robots can resemble human beings today is already quite impressive. Just take a look at Sophia: Dr. David Hanson, founder of Hanson Robotics, developed Sophia with the goal of integrating human creativity and empathy into an artificial intelligence. Sophia answers questions, can ask questions of her own, interpret emotions and simulate about 60 human gestures. Thanks to these skills, Sophia was officially given citizenship by Saudi Arabia late last year. This was probably little more than a PR stunt, because it’s unclear whether she can even claim the rights of a citizen. The importance of recognizing and interpreting emotions for effective communication between humans and AI machines is something Sophia herself pointed out in an interview: “Soft skills are important to make the right decision at the right time.”

Robotic AI systems are taking on more and more applications and helping people to make better decisions. The service robot Paul, which was developed by the Fraunhofer Institute, welcomes customers to an electronics specialist store in Ingolstadt as a shopping assistant. He asks what product they are looking for and accompanies them to the shelf with the right components. So-called emotion-sensitive computer systems are being tested in air traffic control. The Technical University of Chemnitz developed assistants that measure the workload of air traffic controllers and predict possible overload. The research investigates how air traffic controllers perform and how much emotional stress they are under. The tests take place at the university's "Virtual Humans" competence center. To make a reliable assessment, the system learns by analyzing many examples of body postures, facial expressions and audio recordings that are associated with emotions in a given context. From all the data gathered, the system learns to correctly interpret signs of stress.
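How a system might learn to spot stress from labeled examples can be shown with a very small sketch. It is not the method used in Chemnitz or anywhere else mentioned here; the three measurements (heart rate, speech rate, brow tension), all the numbers and the function names are invented for illustration. The idea is simply to average the examples for each emotional state and then assign a new observation to whichever average it is closest to.

```python
from math import dist
from statistics import mean

# Hypothetical labeled examples, invented for illustration only:
# (heart rate in bpm, speech rate in words per second, brow tension from 0 to 1)
TRAINING = {
    "calm": [(62, 2.1, 0.10), (68, 2.4, 0.20), (65, 2.0, 0.15)],
    "stressed": [(95, 3.6, 0.70), (102, 3.9, 0.80), (98, 3.4, 0.75)],
}


def centroid(samples):
    """Average each measurement across all samples of one label."""
    return tuple(mean(column) for column in zip(*samples))


def train(examples):
    """Learn one 'typical' point per label from the labeled examples."""
    return {label: centroid(samples) for label, samples in examples.items()}


def predict(model, sample):
    """Return the label whose typical point is closest to the new sample."""
    return min(model, key=lambda label: dist(model[label], sample))


model = train(TRAINING)
print(predict(model, (99, 3.5, 0.72)))  # a new, unlabeled observation
```

A real system would work with far more examples and far richer signals such as images and audio, and it would put the measurements on a comparable scale first, since here the heart rate dominates the distance simply because its numbers are larger.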

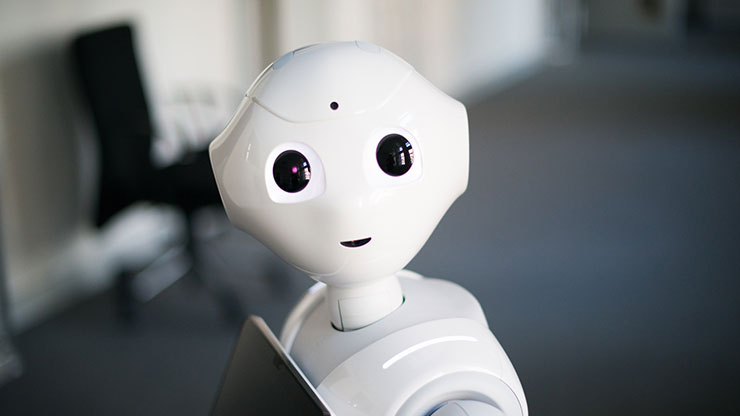

That a human-like robot isn’t necessarily met with acceptance is something scientists at the University of Koblenz-Landau, Germany, have found out. They conducted a study to identify, among other things, which factors critically influence people’s thoughts and feelings about service robots and assistants. One of the results: the less a robot resembles a human in its outward appearance, the more likely it is to be accepted. The more human-like its features, the more uneasy the study participants felt with the robot.

Researchers call this phenomenon the “uncanny valley”. So, while more and more people can imagine service robots making everyday life easier, many are at the same time disturbed by an all too human-like exterior.

That robots with artificial intelligence can cause deep discomfort because they appear to think for themselves is also something that Sophia demonstrated: once, when asked by a journalist if she wanted to destroy humanity, she simply answered “yes,” causing a stir the world over. Sophia later said she meant it as a joke. This is a great example of how important smooth and error-free human-machine communication is. Sophia might have been joking, but her humor wasn’t quite right. Getting this right matters just as much for the more everyday uses of machines in nursing homes, treatment, education and customer service.

Artificial intelligence, i.e. self-learning computers, already accompanies people in many walks of life today.

AI will change the world

More and more people are using artificial intelligence. But few know what AI really is.

What is artificial intelligence?
